5.2 Adding a Predictor Variable

"The greatest value of a picture is when it forces us to notice what we never expected to see." - John Tukey

We have seen in Section 3.2.1 that plotting the residuals vs a predictor variable is one way to determine whether a linear relationship is adequate in the simple linear regression model.

If the points in this plot were not just randomly scattered about zero, then a model incorporating the nonlinear relationship may be needed.

Likewise, we can plot the residuals vs each predictor variable in multiple regression. Again, this shows whether a linear term for that predictor variable is adequate in our model or whether a nonlinear term is needed.
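Here is a minimal sketch of this check in base R. It uses simulated data: the variables `x1`, `x2`, `y` and the quadratic effect are assumptions for illustration and are not part of the bodyfat example. The true mean of `y` is quadratic in `x1`, but the model contains only linear terms. The residuals plotted against `x1` should therefore show a U-shaped pattern, while those plotted against `x2` should look like random scatter about zero.

```r
# Simulated data (hypothetical): y depends on x1 nonlinearly and on x2 linearly
set.seed(1)
n  <- 200
x1 <- rnorm(n)
x2 <- rnorm(n)
y  <- 1 + x1 + 2 * x1^2 + 0.5 * x2 + rnorm(n, sd = 0.5)

# Fit a model with only linear terms for both predictors
fit <- lm(y ~ x1 + x2)
res <- resid(fit)

# Residuals vs each predictor, side by side
par(mfrow = c(1, 2))
plot(x1, res, main = "Residuals vs x1")  # expect a U-shaped pattern
abline(h = 0, lty = 2)
plot(x2, res, main = "Residuals vs x2")  # expect random scatter about zero
abline(h = 0, lty = 2)
```

Least squares residuals are orthogonal to every predictor in the model, so neither plot can show a linear trend. What these plots can reveal is curvature or other nonlinear structure.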

We can also plot the residuals vs a potential predictor variable that is not already in the model. Any systematic pattern in this residual plot indicates that the potential $X$ variable may be useful in modeling $Y$.
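The next sketch applies the same idea to a variable left out of the model. The data are again simulated and the names are hypothetical. Here `y` depends on both `x1` and `x2`, but only `x1` is included in the fit. As a result, the residuals plotted against `x2` show a clear linear trend.

```r
# Simulated data (hypothetical): y depends on both x1 and x2
set.seed(2)
n  <- 200
x1 <- rnorm(n)
x2 <- rnorm(n)
y  <- 1 + 2 * x1 + 3 * x2 + rnorm(n, sd = 0.5)

# Fit a model that leaves out the potential predictor x2
fit1 <- lm(y ~ x1)
res1 <- resid(fit1)

# Residuals vs the omitted variable: a clear linear trend suggests
# x2 may be useful in modeling y
plot(x2, res1, main = "Residuals from y ~ x1 vs x2")
abline(h = 0, lty = 2)
```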

A residual plot vs a potential $X$ is limited in that it may not show the marginal effect of adding that variable when all the other variables are already in the model. To see the marginal effect, we need a different plot.
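The following sketch illustrates this limitation with simulated data (all names hypothetical). Suppose the potential predictor `x2` is strongly correlated with `x1`, which is already in the model. For least squares fits with an intercept, the slope in the plot of residuals against the raw `x2` equals the coefficient that `x2` receives in the model with both predictors, multiplied by $1 - R^2$. Here $R^2$ comes from regressing `x2` on `x1`. The raw residual plot therefore understates the marginal effect.

```r
# Simulated data (hypothetical): x2 is strongly correlated with x1
set.seed(3)
n  <- 200
x1 <- rnorm(n)
x2 <- x1 + rnorm(n, sd = 0.3)
y  <- 1 + x1 + 2 * x2 + rnorm(n)

# Residuals from the model that already contains x1
res1 <- resid(lm(y ~ x1))

# Slope of the residuals against the raw potential predictor
slope_raw <- unname(coef(lm(res1 ~ x2))["x2"])

# Coefficient of x2 once it is actually added to the model
b2_full <- unname(coef(lm(y ~ x1 + x2))["x2"])

# R^2 from regressing x2 on the predictor already in the model
r2_x2 <- summary(lm(x2 ~ x1))$r.squared

# The raw-residual slope is the full-model coefficient shrunk by (1 - R^2)
c(slope_raw = slope_raw, b2_full = b2_full, shrunk = b2_full * (1 - r2_x2))
```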


To see the marginal importance of a potential $X_{j}$ in modeling $Y$, given the other predictor variables that are already in the model, we can regress $X_{j}$ on all the predictor variables already in the model.

We then find the residuals of this fit which we denote as $e_{X_{j}|{\bf X}}$.
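Here is a small sketch with simulated predictors (names hypothetical). In it, `x2` plays the role of $X_{j}$ and `x1` is the predictor already in the model. The residuals are the part of `x2` that `x1` cannot explain linearly. Because the fit includes an intercept, they sum to zero and are orthogonal to `x1`.

```r
# Simulated predictors (hypothetical): x2 is the candidate X_j,
# x1 is the predictor already in the model
set.seed(4)
n  <- 100
x1 <- rnorm(n)
x2 <- 0.7 * x1 + rnorm(n)

# Regress the candidate predictor on the predictors already in the model
e_x2 <- resid(lm(x2 ~ x1))

# These residuals sum to (numerically) zero and are orthogonal to x1
sum(e_x2)
sum(e_x2 * x1)
```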

We now plot the residuals from the model involving $Y$ (regressed on the predictor variables not including $X_{j}$) against $e_{X_{j}|{\bf X}}$.

This plot is called an added variable plot. It is also sometimes called a partial regression plot or an adjusted variable plot.
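The following is a minimal sketch of the construction. It uses simulated data rather than the bodyfat data, and the variable names are hypothetical. The code computes both sets of residuals and plots one against the other. This is the kind of plot that `avPlots` in the car package draws.

As a check, the least squares slope in the added variable plot equals the coefficient of `x2` in the model containing both predictors. This is a standard result, often called the Frisch-Waugh-Lovell theorem.

```r
# Simulated data (hypothetical)
set.seed(5)
n  <- 100
x1 <- rnorm(n)
x2 <- 0.7 * x1 + rnorm(n)
y  <- 2 + x1 + 1.5 * x2 + rnorm(n)

# Residuals of Y regressed on the predictors already in the model
e_y  <- resid(lm(y ~ x1))
# Residuals of the candidate X_j regressed on the same predictors
e_x2 <- resid(lm(x2 ~ x1))

# Added variable plot for x2 given x1, with its least squares line
plot(e_x2, e_y, xlab = "e(x2 | x1)", ylab = "e(y | x1)",
     main = "Added variable plot for x2")
av_fit <- lm(e_y ~ e_x2)
abline(av_fit)

# The slope in this plot matches the x2 coefficient in the full model
full <- lm(y ~ x1 + x2)
c(av_slope = unname(coef(av_fit)["e_x2"]), full_coef = unname(coef(full)["x2"]))
```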

From the added variable plot we can see the marginal importance of $X_{j}$ in reducing the variability remaining after regression on the other predictor variables. If the points follow a linear pattern with a nonzero slope, then $X_{j}$ may help explain variability in $Y$ beyond what the variables already included explain.
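The phrase "reducing the variability remaining" can be made concrete, again with simulated data and hypothetical names. Before a line is fitted, the vertical spread in the added variable plot is the SSE for the model with only `x1`. The spread about the fitted line equals the SSE for the model with both `x1` and `x2`. The drop between the two is the reduction in SSE obtained by adding `x2`.

```r
# Simulated data (hypothetical)
set.seed(6)
n  <- 100
x1 <- rnorm(n)
x2 <- 0.7 * x1 + rnorm(n)
y  <- 2 + x1 + 1.5 * x2 + rnorm(n)

e_y    <- resid(lm(y ~ x1))
e_x2   <- resid(lm(x2 ~ x1))
av_fit <- lm(e_y ~ e_x2)

sse_reduced <- sum(e_y^2)                 # SSE(x1): variability left after x1
sse_av      <- sum(resid(av_fit)^2)       # spread about the line in the AV plot
sse_full    <- deviance(lm(y ~ x1 + x2))  # SSE(x1, x2)

c(reduced = sse_reduced, av = sse_av, full = sse_full)
```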

We may also see a systematic but nonlinear pattern in the points. This means $X_{j}$ may be helpful in the model, but a nonlinear term is needed.
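To illustrate the nonlinear case, here is a sketch with simulated data (hypothetical names) in which `y` depends on `x2` through a quadratic term. The added variable plot for `x2` is curved. A quadratic fit to the points in the plot, used here only as an informal check, has a clearly significant squared term.

```r
# Simulated data (hypothetical): y depends on x2 through a quadratic term
set.seed(7)
n  <- 200
x1 <- rnorm(n)
x2 <- 0.3 * x1 + rnorm(n)
y  <- 1 + x1 + x2^2 + rnorm(n, sd = 0.5)

e_y  <- resid(lm(y ~ x1))
e_x2 <- resid(lm(x2 ~ x1))

# The added variable plot shows a systematic but nonlinear (curved) pattern
plot(e_x2, e_y, main = "Added variable plot for x2")

# Informal check: a quadratic in e_x2 describes the plot better than a line
curve_fit <- lm(e_y ~ e_x2 + I(e_x2^2))
summary(curve_fit)$coefficients
```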


Let's examine the bodyfat data once again.

```r
library(tidyverse)
library(car)

dat = read.table("http://www.jpstats.org/Regression/data/BodyFat.txt", header = TRUE)
```

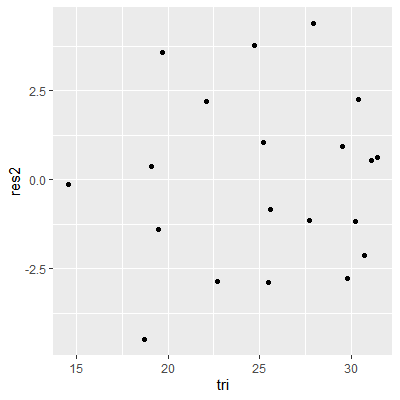

```r
# suppose we start with just thigh in the model
fit2 = lm(bfat ~ thigh, data = dat)
dat$res2 = fit2 %>% resid()

# plot the residuals versus the potential variable tri
ggplot(dat, aes(x = tri, y = res2)) +
  geom_point()

# this plot shows no systematic pattern, so a nonlinear term is
# not needed. However, does tri explain anything more about
# Y given that thigh is already included?
```

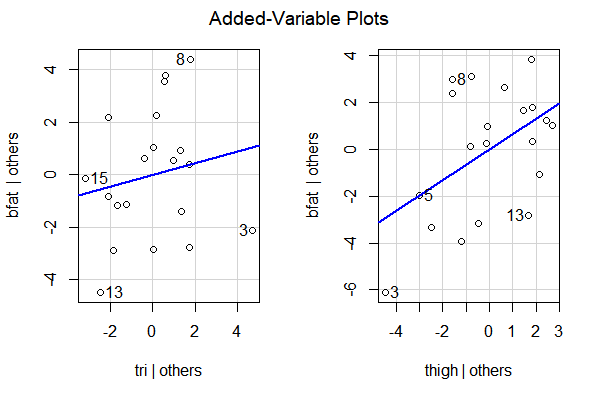

```r
# fit the model with both predictors
fit12 = lm(bfat ~ tri + thigh, data = dat)

# give the added variable plots for all variables in the fit
avPlots(fit12)

# It appears that adding thigh when tri is already included helps more
# than adding tri when thigh is already included, because the
# plots show thigh with a steeper slope and less spread about
# the line.
```